Generative Moment Matching Networks

- DR.GEEK

- Dec 16, 2020

- 1 min read

(16th-December-2020)

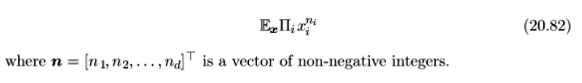

• Generative moment matching networks (Li et al., 2015; Dziugaite et al., 2015) are another form of generative model based on differentiable generator networks. Unlike VAEs and GANs, they do not need to pair the generator network with any other network: neither an inference network as used with VAEs nor a discriminator network as used with GANs. These networks are trained with a technique called moment matching. The basic idea is to train the generator so that many statistics of the samples it generates are as similar as possible to the same statistics of the examples in the training set. In this context, a moment is an expectation of different powers of a random variable. For example, the first moment is the mean, the second moment is the mean of the squared values, and so on. In multiple dimensions, each element of the random vector may be raised to a different power, so that a moment may be any quantity of the form

$$\mathbb{E}_{x} \prod_i x_i^{n_i},$$

where $n = [n_1, n_2, \dots, n_d]^\top$ is a vector of non-negative integers.
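To make the idea of a multi-dimensional moment concrete, here is a minimal sketch that estimates the mixed moment E[∏ x_i^(n_i)] from samples and compares two sample sets moment by moment. The function names (`empirical_moment`, `moment_gap`), the choice of power vectors, and the toy Gaussian data are all assumptions made for illustration. This is not the training procedure from the cited papers, only a picture of what "matching moments" means.

```python
import random
from math import prod


def empirical_moment(samples, powers):
    """Estimate E[prod_i x_i ** n_i] by averaging over the samples."""
    total = 0.0
    for x in samples:
        total += prod(xi ** ni for xi, ni in zip(x, powers))
    return total / len(samples)


def moment_gap(data, generated, power_vectors):
    """Sum of squared differences between corresponding moments."""
    return sum(
        (empirical_moment(data, n) - empirical_moment(generated, n)) ** 2
        for n in power_vectors
    )


# All power vectors of total order 1 and 2 for a 2-D random vector
# (hypothetical choice; higher orders could be added the same way).
power_vectors = [(1, 0), (0, 1), (2, 0), (1, 1), (0, 2)]

rng = random.Random(0)
# "Training set": 2-D standard normal samples.
data = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(2000)]
# A "generator" that matches the data distribution.
good = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(2000)]
# A "generator" with a shifted mean and inflated variance.
bad = [(rng.gauss(1.0, 1), rng.gauss(0, 2.0)) for _ in range(2000)]

gap_good = moment_gap(data, good, power_vectors)
gap_bad = moment_gap(data, bad, power_vectors)
print(f"gap (matching generator):   {gap_good:.4f}")
print(f"gap (mismatched generator): {gap_bad:.4f}")
```

The mismatched generator should produce a much larger gap, since its first and second moments differ from those of the data. Note that the number of power vectors grows quickly with the dimension and the order, so listing them explicitly like this is only practical for small toy cases.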

• When generating images, it is often useful to use a generator network that includes a convolutional structure (see, for example, Goodfellow et al. (2014c) or Dosovitskiy et al. (2015)). To do so, we use the “transpose” of the convolution operator, described in section 9.5. This approach often yields more realistic images and does so using fewer parameters than fully connected layers without parameter sharing. Convolutional networks for recognition tasks have information flow from the image to some summarization layer at the top of the network, often a class label.
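As a rough illustration of the "transpose" of the convolution operator, the sketch below implements a 1-D strided convolution and its transpose in plain Python. The function names, the kernel values, and the 1-D setting are assumptions chosen to keep the example small; image generators use the 2-D analogue. The forward operator maps a long signal to a short one, while the transpose maps a short code to a long signal and reuses the same three kernel weights at every position.

```python
def conv1d(x, kernel, stride=1):
    """Valid 1-D convolution (cross-correlation): long input -> short output."""
    k = len(kernel)
    n_out = (len(x) - k) // stride + 1
    return [
        sum(x[i * stride + j] * kernel[j] for j in range(k))
        for i in range(n_out)
    ]


def conv1d_transpose(z, kernel, stride=1):
    """Transpose of conv1d: short input -> long output."""
    k = len(kernel)
    out = [0.0] * ((len(z) - 1) * stride + k)
    # Each input element "stamps" a scaled copy of the kernel into the output.
    for i, zi in enumerate(z):
        for j in range(k):
            out[i * stride + j] += zi * kernel[j]
    return out


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


kernel = [1.0, 2.0, 1.0]   # 3 shared weights (illustrative values)
z = [1.0, 0.0, -1.0]       # short "code", length 3
upsampled = conv1d_transpose(z, kernel, stride=2)
print(upsampled)           # length (3 - 1) * 2 + 3 = 7

# Check that the two functions really are transposes of each other:
# <conv1d(x), z> must equal <x, conv1d_transpose(z)> for any x.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
lhs = dot(conv1d(x, kernel, stride=2), z)
rhs = dot(x, upsampled)
print(lhs, rhs)
```

In this toy setting the map from 3 inputs to 7 outputs uses only 3 weights, whereas a fully connected layer between the same sizes would need 3 × 7 = 21. That is the parameter saving from weight sharing the paragraph above refers to.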
